Geometric Gestures: Interactive Patterns

Exhibition: Technology Lights up Traditional Culture: Beijing Normal University School of Future Design PBL Project Exhibition

Award: The 7th Global Competition on Design for Future Education - Finalist

Conference: The First Global Heads Forum in Education and The Inauguration Conference of The Global Alliance of Educational Leaders and Department Heads (GAELDH) 2024

Overview

“Geometric Gestures: Interactive Patterns” explores how traditional geometric patterns can be combined with modern interactive technology. It aims to bridge historical art forms and contemporary digital interfaces by letting users interact directly with geometric designs through intuitive hand gestures.

We extract patterns from real scenes in the Forbidden City and abstract them into geometric shapes. We then design hand gestures that mimic these shapes, so that users can trigger an introduction to each pattern by making the corresponding gesture.

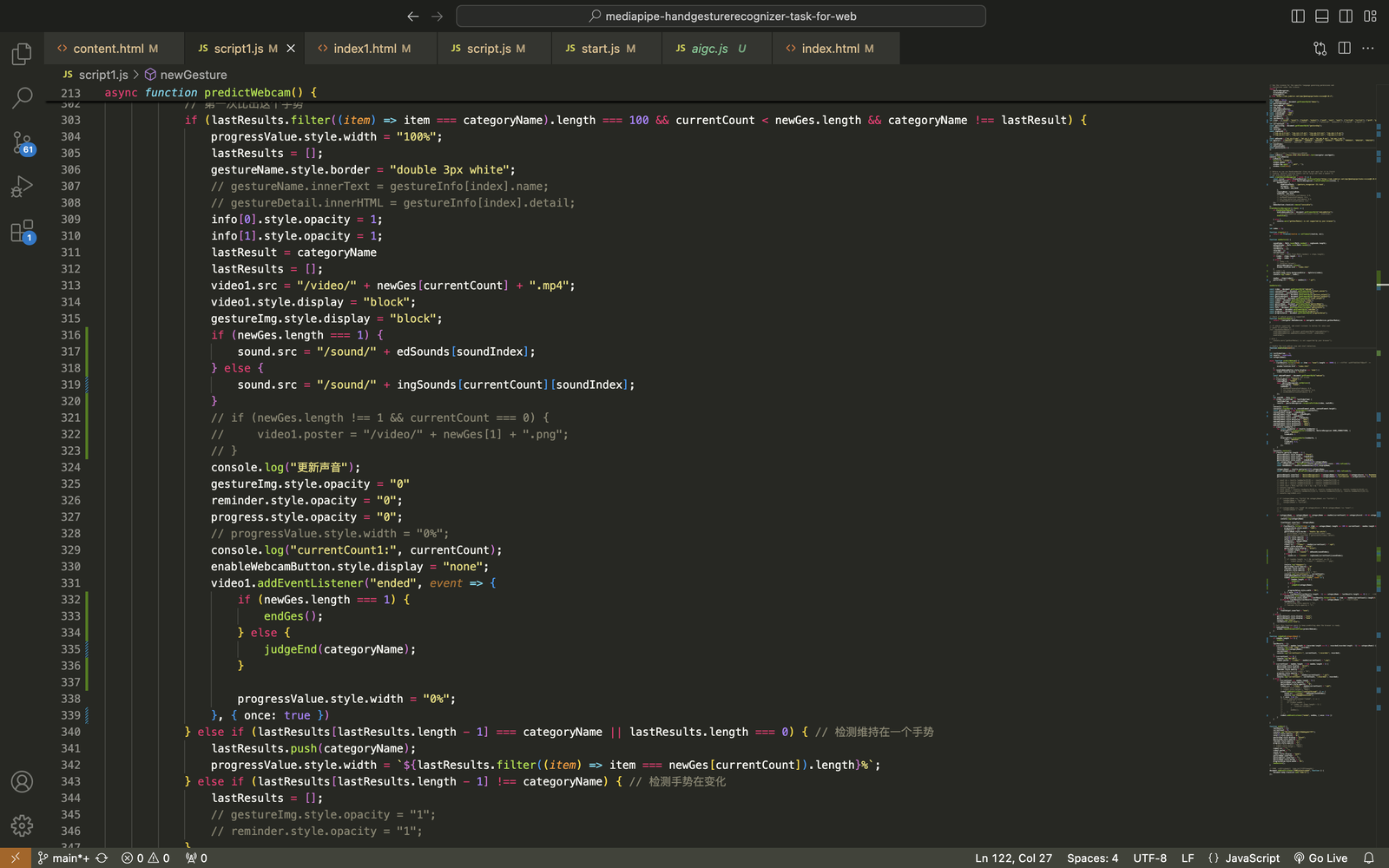

The project uses MediaPipe for real-time gesture recognition inside a web application, so users can interact with the geometric patterns through simple hand gestures directly in their web browser.

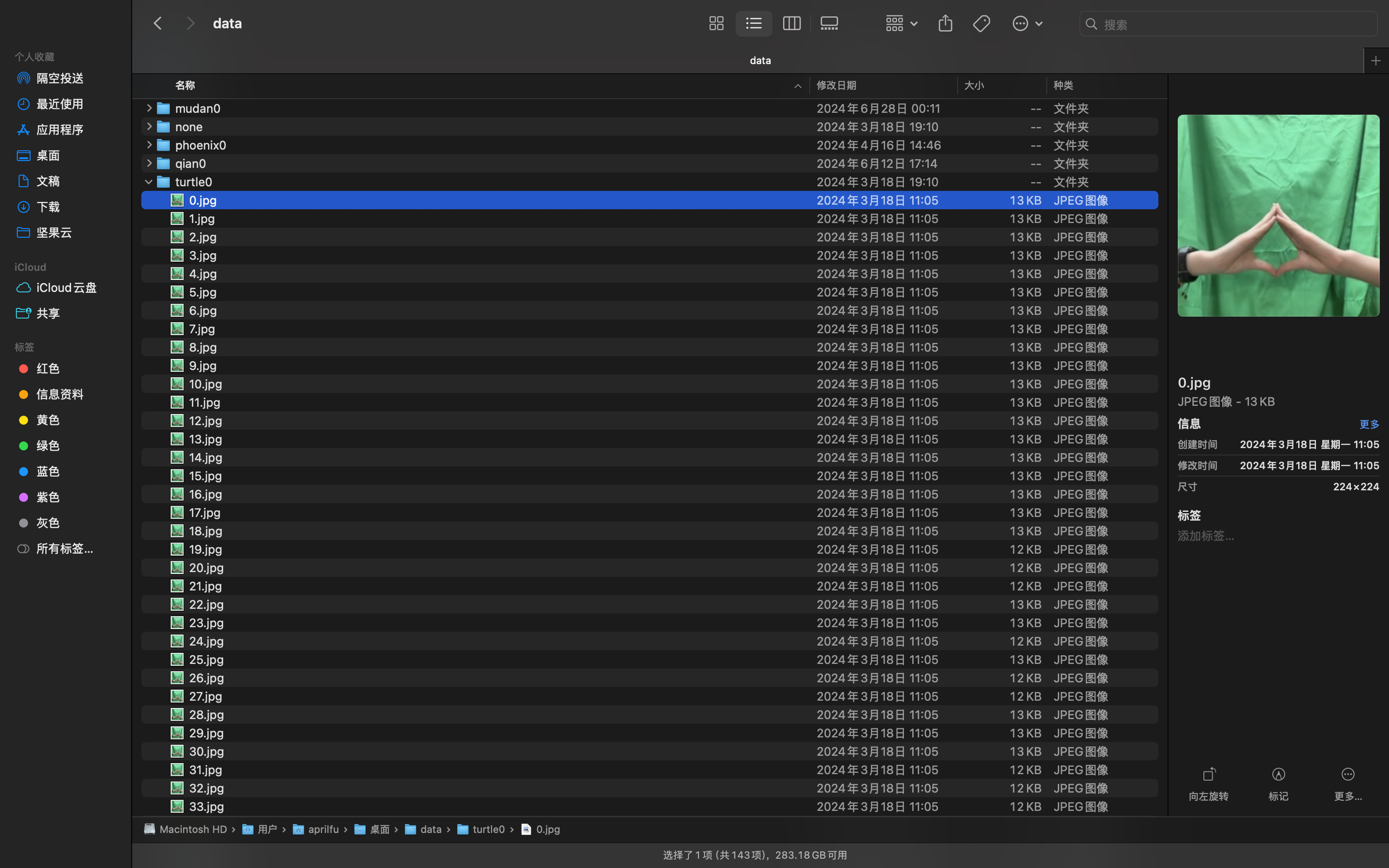

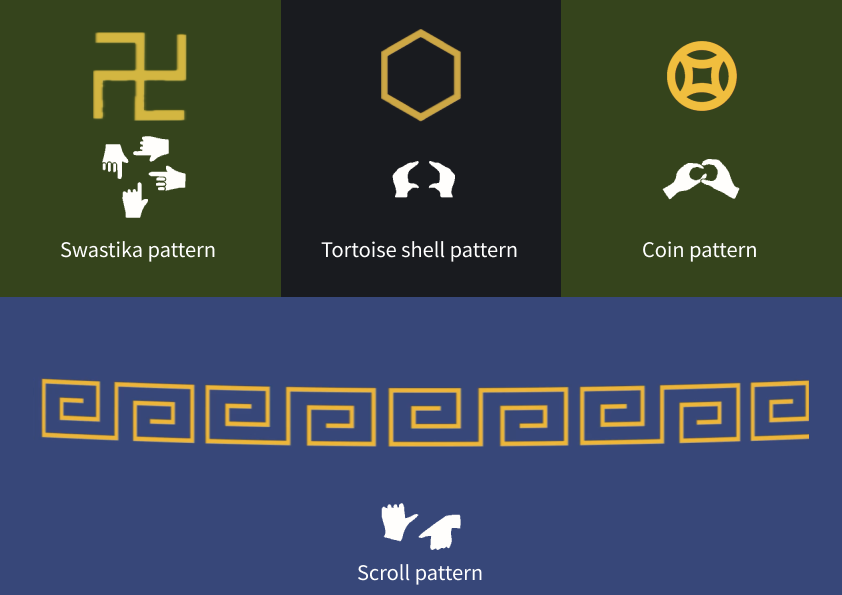

Gesture Design

We extract patterns from real scenes and transform them into geometric shapes. Then, we design gestures that mimic these shapes.

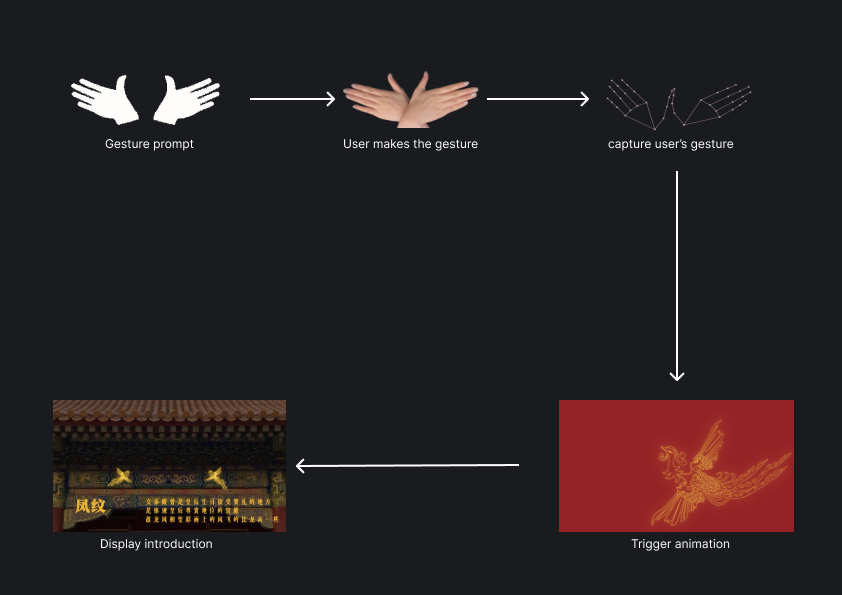

Interaction Flow

The audience can follow the on-screen instructions to perform gestures. When the correct gesture is detected, the introductory animation will be triggered.
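The detection step above can be sketched as a small piece of state that fires once the expected gesture has been held steadily. This is a minimal illustration, not the project's actual code: the `GesturePrediction` shape, the `GestureTrigger` class, the 0.7 score threshold, and the five-frame hold are all assumptions chosen for the example.

```typescript
// Hypothetical shape of one per-frame prediction; field names are illustrative.
interface GesturePrediction {
  label: string; // e.g. "circle"
  score: number; // classifier confidence in [0, 1]
}

// Fires once the expected gesture has been seen in enough consecutive frames.
class GestureTrigger {
  private streak = 0;
  private readonly expected: string;
  private readonly minScore: number;
  private readonly holdFrames: number;

  constructor(expected: string, minScore = 0.7, holdFrames = 5) {
    this.expected = expected;     // gesture the on-screen instruction asks for
    this.minScore = minScore;     // assumed confidence threshold
    this.holdFrames = holdFrames; // assumed number of consecutive matching frames
  }

  // Feed one frame's prediction (null when no hand is visible).
  // Returns true only on the frame where the trigger fires.
  update(prediction: GesturePrediction | null): boolean {
    const matches =
      prediction !== null &&
      prediction.label === this.expected &&
      prediction.score >= this.minScore;
    this.streak = matches ? this.streak + 1 : 0;
    if (this.streak >= this.holdFrames) {
      this.streak = 0; // reset so the animation is not retriggered every frame
      return true;
    }
    return false;
  }
}
```

Requiring several consecutive matching frames is one way to keep a single noisy prediction from starting the animation; when `update` returns true, the page would start the introductory animation for that pattern.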

Technical Implementation

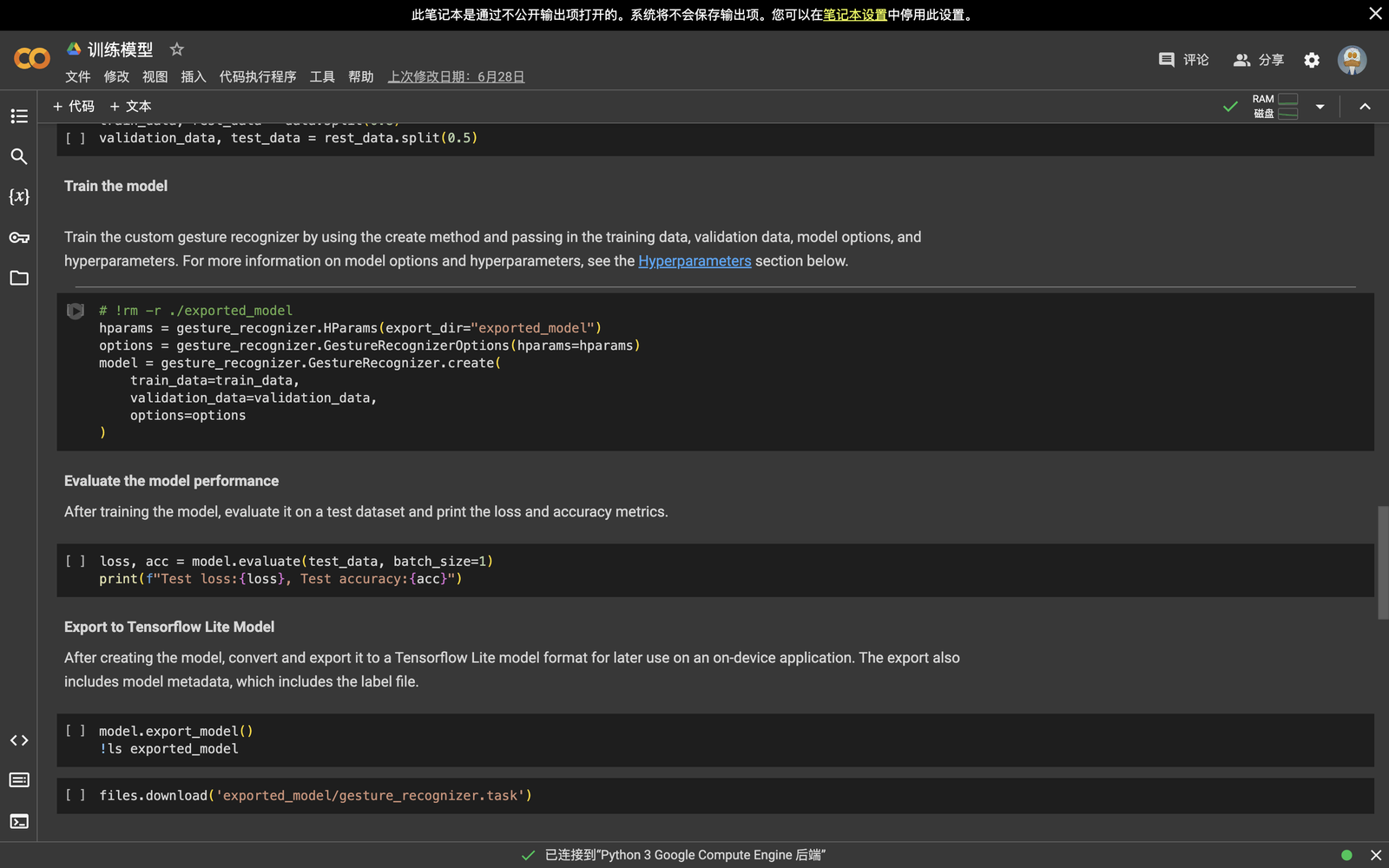

We manually collect photos of the gestures as our dataset. We then use MediaPipe to train our model in Google Colab and export the model file, which the web application loads. Additionally, the program is configured to restart automatically if no gesture is detected for 30 seconds.
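The 30-second automatic restart can be sketched as a small idle watchdog. This is an illustration under stated assumptions rather than the project's implementation: the `IdleWatchdog` class, its `tick` method, and the injected timestamps are hypothetical names introduced here so the logic can be tested without a browser.

```typescript
// Calls onIdle when no gesture has been detected for timeoutMs milliseconds.
class IdleWatchdog {
  private lastSeenMs: number;
  private readonly timeoutMs: number;
  private readonly onIdle: () => void;

  constructor(onIdle: () => void, startMs: number, timeoutMs = 30000) {
    this.onIdle = onIdle;       // hypothetical restart callback
    this.lastSeenMs = startMs;  // the idle window starts when the program starts
    this.timeoutMs = timeoutMs; // 30 seconds, as described in the text
  }

  // Call once per processed frame with the current time in milliseconds
  // and whether any gesture was detected in that frame.
  tick(nowMs: number, gestureDetected: boolean): void {
    if (gestureDetected) {
      this.lastSeenMs = nowMs; // activity seen: restart the idle window
      return;
    }
    if (nowMs - this.lastSeenMs >= this.timeoutMs) {
      this.lastSeenMs = nowMs; // begin a fresh idle window after restarting
      this.onIdle();
    }
  }
}
```

In a browser, the timestamps could come from `performance.now()` and `onIdle` could reset the interface to its opening screen or call `window.location.reload()`; which of these the exhibit actually does is not stated in the text.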

Technical Stack

MediaPipe: Machine learning framework for real-time gesture recognition

Web Development: Browser-based interaction framework

Google Colab: Model training and development environment

Reflection

This project holds significant meaning for me because I conceptualized, developed, presented, and deployed it. When tasked with creating a work related to the patterns of the Forbidden City, I wanted to make something engaging. I was inspired by an animation created by my classmate, who is also the co-author of this work. I aimed to connect people with these beautiful, silent patterns, and I wondered how people could interact with them and feel their essence. This led me to consider gestures as a way for people to understand the shapes of the patterns, while an accompanying animation narrates their stories.